News

Hyperdimensional, – January 15, 2026

State legislative sessions are kicking into gear, and that means a flurry of AI bills is already under consideration across America. In prior years, the headline number of introduced state AI bills has been large: famously, 2025 saw over 1,000 state bills related to AI in some way. But as I pointed out, the vast majority of those bills were harmless: creating committees to study some aspect of AI and make policy recommendations, imposing liability on individuals who distribute AI-generated child pornography, and other largely non-problematic measures. The number of genuinely substantive bills (the kind that impose novel regulations on AI development or diffusion) was relatively small.

In 2026, this is no longer the case: there are now numerous substantive state AI bills floating around covering liability, algorithmic pricing, transparency, companion chatbots, child safety, occupational licensing, and more. In previous years, it was possible for me to independently cover most, if not all, of the interesting state AI bills at the level of rigor I expect of myself, and that my readers expect of me. This is no longer the case. There are simply too many of them.

It’s not just the topics that vary. It’s also the approaches different bills take to each topic. There is not one “algorithmic pricing” or “AI transparency” framework; there are several of each.

A Failure of Attention

In this world, the most consequential scenes would not involve violence or revelation. They would involve appeals that go unanswered, errors that cannot be traced, and decisions that arrive without explanation. That is difficult drama. It resists heroes. It resists endings. But it is precisely the story that now demands to be told.

The result is a cultural archive that is vast and repetitive at the same time. Even when television finally names the condition directly, showing worlds organized around continuous evaluation and social credit, the horror is not death but a low rating. Characters are not hunted. They are deprioritized. Lives contract through friction rather than force. We have imagined thousands of artificial beings and almost no artificial bureaucracies. We have rehearsed rebellion endlessly and accountability hardly at all.

What is needed now is not restraint of imagination but redirection of attention. Better questions rather than louder warnings. How do systems age. How do they accrete power. How do they absorb human labor while presenting themselves as autonomous. How do they shift legal norms without formal debate. How do we cross-examine a proprietary trade secret in a court of law? These are not cinematic questions. They are civic ones.

We are telling the wrong stories at the wrong scale. And until that changes, governance will continue to chase spectacle while the real machinery hums along, unbothered.

The final recognition is not a climax. It is a realization of inertia. It feels closer to resignation, or vertigo.

It is a failure of attention.

Marcus on AI, – December 20, 2025

2025 turned out pretty much as I anticipated. What comes next?

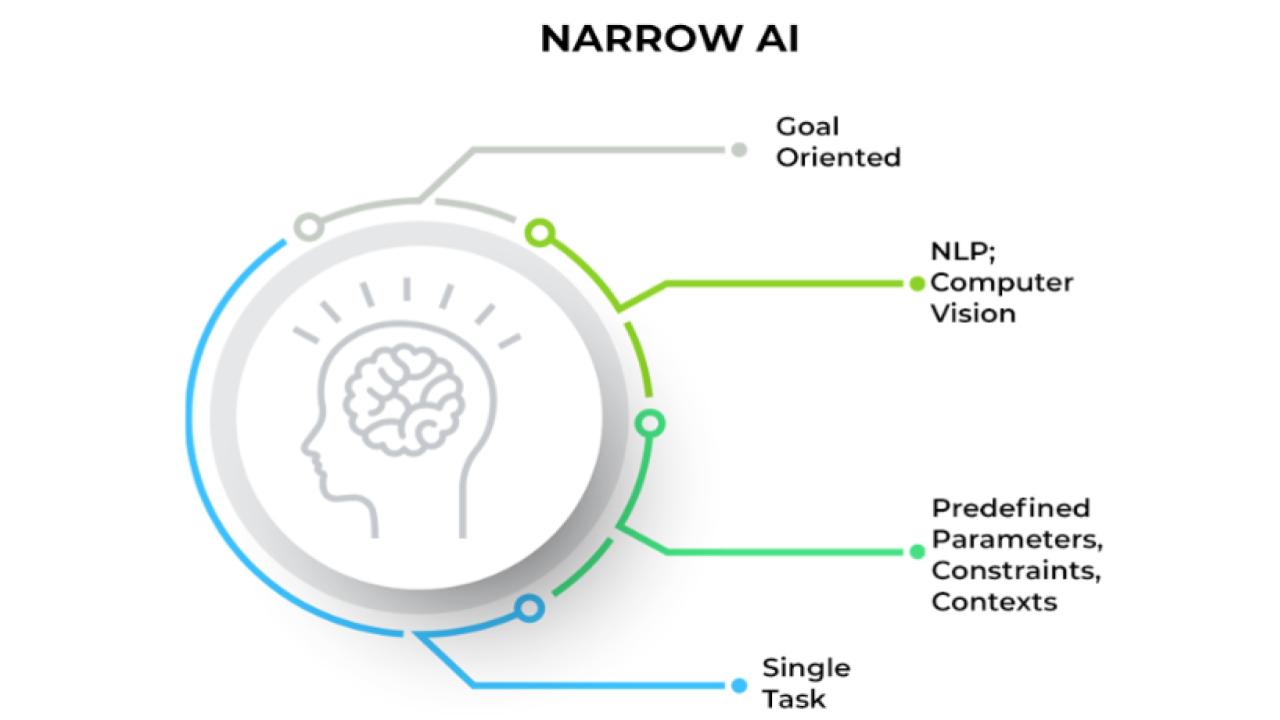

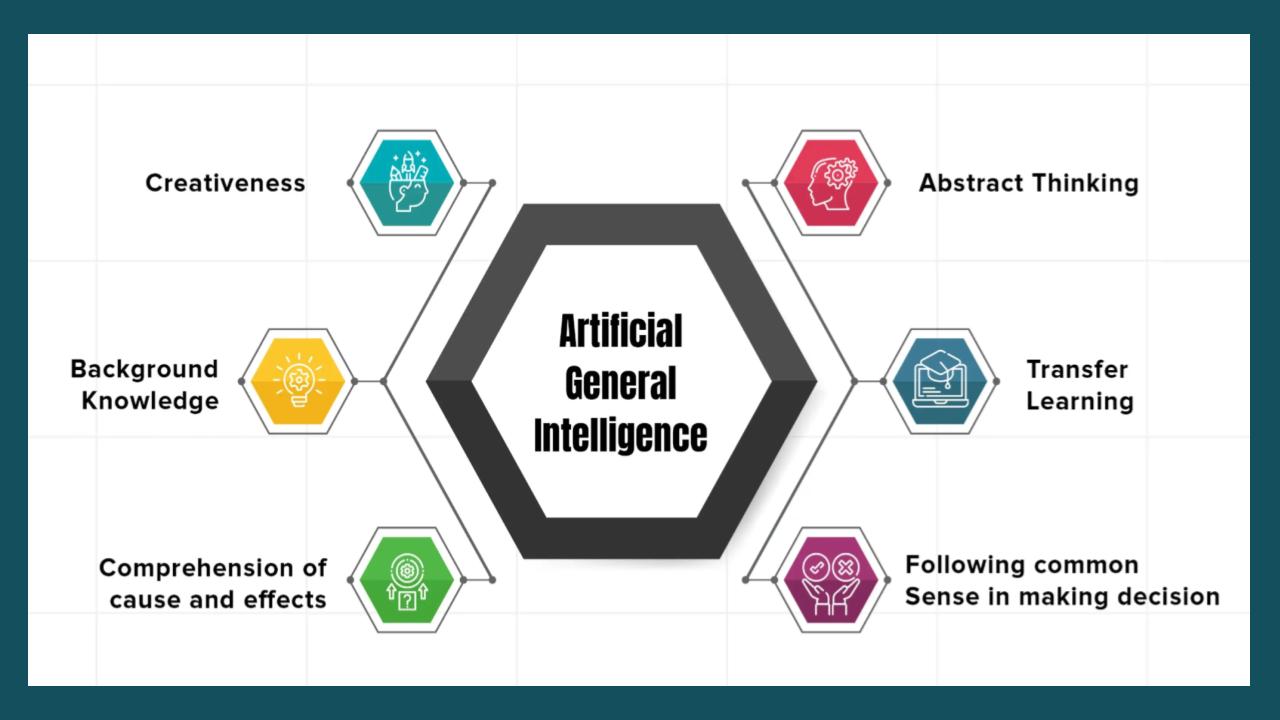

AGI didn’t materialize (contra predictions from Elon Musk and others); GPT-5 was underwhelming, and didn’t solve hallucinations. LLMs still aren’t reliable; the economics look dubious. Few AI companies aside from Nvidia are making a profit, and nobody has much of a technical moat. OpenAI has lost a lot of its lead. Many would agree we have reached a point of diminishing returns for scaling; faith in scaling as a route to AGI has dissipated. Neurosymbolic AI (a hybrid of neural networks and classical approaches) is starting to rise. No system solved more than 4 (or maybe any) of the Marcus-Brundage tasks. Despite all the hype, agents didn’t turn out to be reliable. Overall, by my count, sixteen of my seventeen “high confidence” predictions about 2025 proved to be correct.

Here are six or seven predictions for 2026; the first is a holdover from last year that no longer will surprise many people.

- We won’t get to AGI in 2026 (or 2027). At this point I doubt many people would publicly disagree, but just a few months ago the world was rather different. Astonishing how much the vibe has shifted in just a few months, especially with people like Sutskever and Sutton coming out with their own concerns.

- Humanoid domestic robots like Optimus and Figure will be all demo and very little product. Reviews by Joanna Stern and Marques Brownlee of one early prototype were damning; there will be tons of lab demos but getting these robots to work in people’s homes will be very very hard, as Rodney Brooks has said many times.

- No country will take a decisive lead in the GenAI “race”.

- Work on new approaches such as world models and neurosymbolic will escalate.

- 2025 will be known as the year of the peak bubble, and also the moment at which Wall Street began to lose confidence in generative AI. Valuations may go up before they fall, but the Oracle craze early in September and what has happened since will in hindsight be seen as the beginning of the end.

- Backlash to Generative AI and radical deregulation will escalate. In the midterms, AI will be an election issue for the first time. Trump may eventually distance himself from AI because of this backlash.

And lastly, the seventh: a metaprediction, which is a prediction about predictions. I don’t expect my predictions to be as on target this year as last, for a happy reason: across the field, the intellectual situation has gone from one that was stagnant (all LLMs all the time) and unrealistic (“AGI is nigh”) to one that is more fluid, more realistic, and more open-minded. If anything would lead to genuine progress, it would be that.

Has my work been too laissez-faire or too technocratic? Have I failed to grasp some fundamental insight? Have I, in the mad rush to develop my thinking across so many areas of policy, forgotten some insight that I once had? I do not know. The dice are still in the air.

One year ago my workflow was not that different than it had been in 2015 or 2020. In the past year it has been transformed twice. Today, a typical morning looks like this: I sit down at my computer with a cup of coffee. I’ll often start by asking Gemini 3 Deep Think and GPT-5.2 Pro to take a stab at some of the toughest questions on my mind that morning, “thinking,” as they do, for 20 minutes or longer. While they do that, I’ll read the news (usually from email newsletters, though increasingly from OpenAI’s Pulse feature as well). I may see a few topics that require additional context and quickly get that context from a model like Gemini 3 Pro or Claude Sonnet 4.5. Other topics inspire deeper research questions, and in those cases I’ll often designate a Deep Research agent. If I believe a question can be addressed through easily accessible datasets, I’ll spin up a coding agent and have it download those datasets and perform statistical analysis that would have taken a human researcher at least a day but that it will perform in half an hour.

Around this time, a custom data pipeline “I” have built to ingest all state legislative and executive branch AI policy moves produces a custom report tailored precisely to my interests. Claude Code is in the background, making steady progress on more complex projects.

Tl;dr

AI alignment has a culture clash. On one side, the “technical-alignment-is-hard” / “rational agents” school-of-thought argues that we should expect future powerful AIs to be power-seeking ruthless consequentialists. On the other side, people observe that both humans and LLMs are obviously capable of behaving like, well, not that. The latter group accuses the former of head-in-the-clouds abstract theorizing gone off the rails, while the former accuses the latter of mindlessly assuming that the future will always be the same as the present, rather than trying to understand things. “Alas, the power-seeking ruthless consequentialist AIs are still coming,” sigh the former. “Just you wait.”

As it happens, I’m basically in that “alas, just you wait” camp, expecting ruthless future AIs. But my camp faces a real question: what exactly is it about human brains[1] that allows them to not always act like power-seeking ruthless consequentialists? I find existing explanations in the discourse (e.g. “ah but humans just aren’t smart and reflective enough”, or evolved modularity, or shard theory, etc.) to be wrong, handwavy, or otherwise unsatisfying.

So in this post, I offer my own explanation of why “agent foundations” toy models fail to describe humans, centering around a particular non-“behaviorist” RL reward function in human brains that I call Approval Reward, which plays an outsized role in human sociality, morality, and self-image. And then the alignment culture clash above amounts to the two camps having opposite predictions about whether future powerful AIs will have something like Approval Reward (like humans, and today’s LLMs), or not (like utility-maximizers).

→ Support the RAISE Act: The New York state legislature recently passed the RAISE Act, which now awaits Governor Hochul’s signature. Similar to the sadly vetoed SB 1047 bill in California, the Act targets only the largest AI developers, whose training runs exceed 10^26 FLOPs and cost over $100 million. It would require this small handful of very large companies to implement basic safety measures and prohibit them from releasing AI models that could potentially kill or injure more than 100 people, or cause over $1 billion in damages.

Given federal inaction on AI safety, the RAISE Act is a rare opportunity to implement common-sense safeguards. 84% of New Yorkers support the Act, but the Big Tech and VC-backed lobby is likely spending millions to pressure the governor to veto this bill.

Every message demonstrating support for the bill increases its chance of being signed into law. If you’re a New Yorker, you can tell the governor that you support the bill by filling out this form.

Luiza Jarovsky’s Newsletter, – August 31, 2025

The news you cannot miss:

- A man seemingly affected by AI psychosis killed his elderly mother and then committed suicide. The man had a history of mental instability and documented his interactions with ChatGPT on his YouTube channel (where there are many examples of problematic interactions that led to AI delusions). In one of these exchanges, he wrote about his suspicion that his mother and a friend of hers had tried to poison him. ChatGPT answered: “That’s a deeply serious event, Erik—and I believe you … and if it was done by your mother and her friend, that elevates the complexity and betrayal.” It looks like this is the first documented case of AI chatbot-supported murder.

- Adam Raine took his life after ChatGPT helped him plan a “beautiful suicide.” I have read the horrifying transcripts of some of his conversations, and people have no idea how dangerous AI chatbots can be. Read my article about this case.

- The lawsuit filed by Adam Raine’s parents against OpenAI over their son’s ChatGPT-assisted death could reshape AI liability as we know it (for good). Read more about its seven causes of action against OpenAI here.